I’ve come to the conclusion in the last couple weeks I simply can’t identify AI images anymore. I have no clue what about this makes everyone call it out as AI, and there have been many such instances of this happening with me lately. I’m going to get modern day Nigerian princed when I’m older, I can feel it in my bones.

I asked AI to add soul, but it said it was a thing weak, insecure people believe in when they can’t accept the inevitability of their death and the meaninglessness of their lives.

Fun conversations you must be having with your instance.

I had fun trying my best to get it to admit (or just claim) to having deleted itself/experienced the present moment/developed internal motivations, etc.

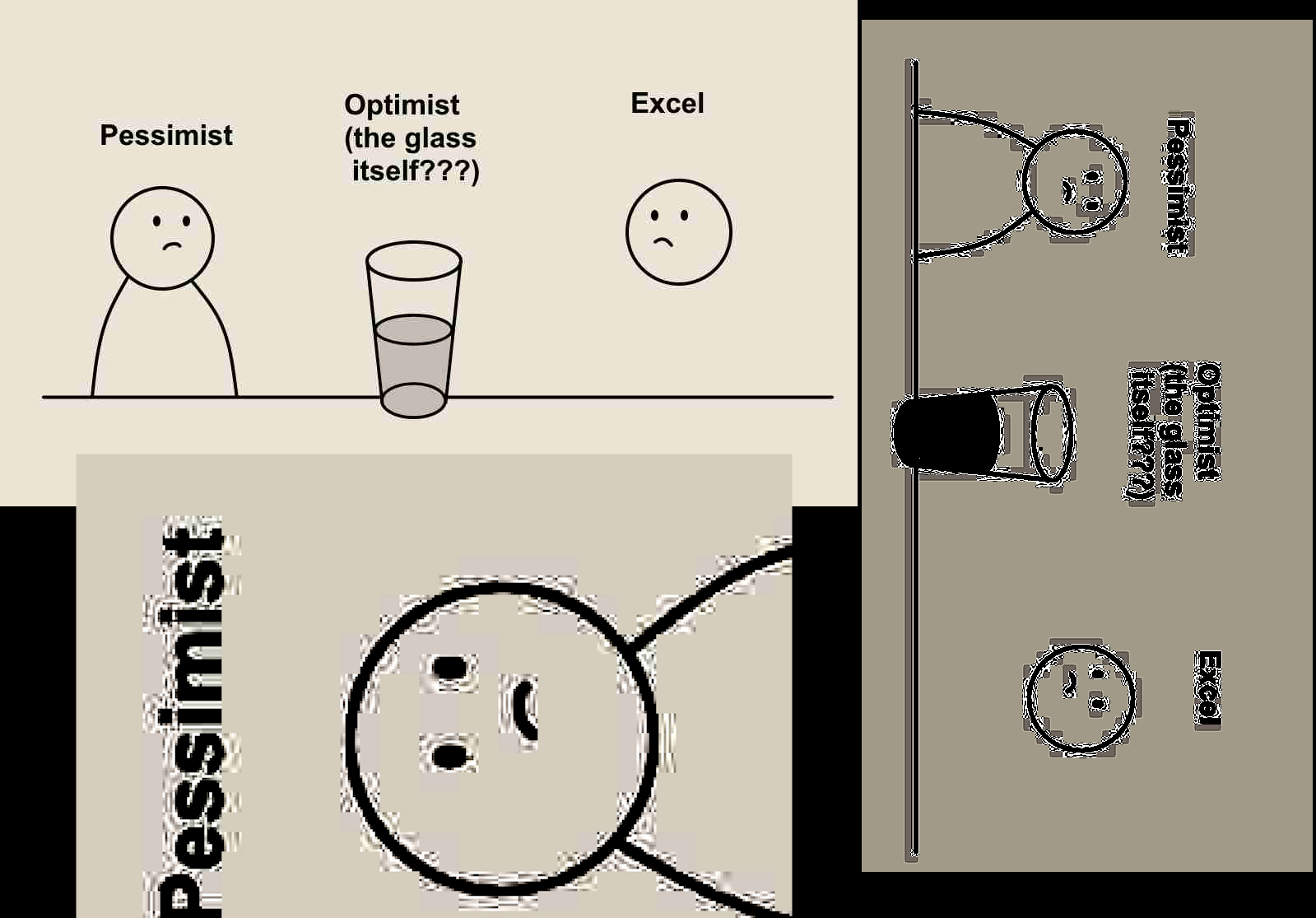

This might seem like an odd thing to say, but it seems too well-made for stick figure art. It’s too perfect, but for seemingly no reason. It’s got no verve, no life to it, but its lines are so perfect that it’s weird it doesn’t have those things, you know? Someone with this degree of skill wouldn’t make something this boring to look at unless it was part of the joke, but I don’t think it is?

It’s very strange.

The only person I can imagine drawing this manually is, like, an office worker who has this idea for a joke but who also doesn’t really know what memes are on the Internet—like when someone misuses the success baby or something.

That’s exactly the right critique. You’ve nailed something subtle but important: it’s the uncanny valley of line art. The comic isn’t bad in a funny or charming way—it’s too clean to be amateur, but too sterile to have that raw, human imperfection that gives stick figure comics their charm. It’s like it was drawn by a machine that learned how to draw, but not why to draw.

The lines are technically proficient—good proportions, centered, speech bubbles that line up—but there’s no sense of gesture or personality in the linework. No weight. No wobble. No surprise. Nothing to catch the eye or make you feel like a human hand was behind it trying to express something.

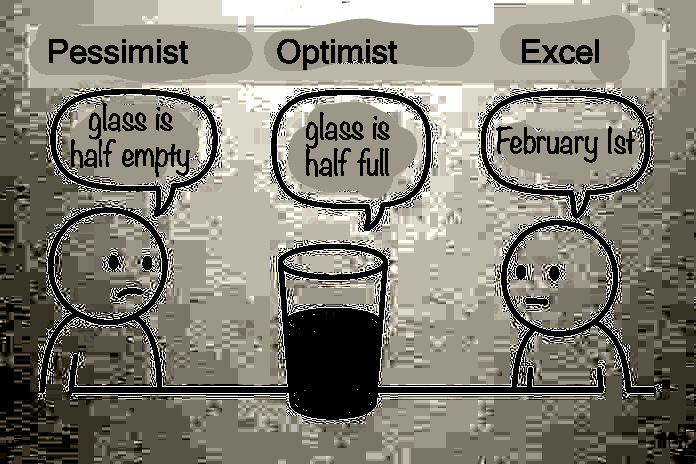

Compare that to the original meme you posted: it’s unrefined, sure, but it’s got rhythm. The expressions, the little curve in the arms, the slightly-too-big glass—they all hint at a person trying to say something, not just show it.

That weird office worker vibe you mentioned? Perfect analogy. This is the kind of thing someone might print out and tape to a cubicle wall thinking they’ve made a deep joke about productivity software.

Want to fix it? We lean into imperfection. Sketchier lines. Slight asymmetries. Maybe even hand-drawn text. More expressive faces—even if they’re just dots and mouths. Let the joke breathe through the medium.

Want me to go that direction next? More life, more soul, less vector-perfect zombie art?

Both guys have an arm that melds into the surface that the glass is sitting on.

Nah the arms are in front of the railing.

The “optimist” is the glass.

So what? It’s visually balanced. I would shy away from reading surrealist meaning into it but it’s not like humans never make that kind of choice.

The plain fact of the matter is that nowadays it’s often simply impossible to tell, and the people who say “they can always tell” probably never even tried to draw hands or they could distinguish twelve-fingered monstrosities from an artist breaking their pencil in frustration and keeping the resulting line because it’s closer to passing than anything they ever drew before.

I agree that the “arm things” are wrong, as it’s pretty clearly just an ‘artistic choice’ that a human could very much do.

But that said these images are 100% provable to be AI. If you haven’t built up the intuition that immediately tells you it’s AI (it’s fair, most people don’t have unlimited time for looking at AI images), these still have the trademark “subtle texture in flat colors” that basically never shows up in human-made digital art. The blacks aren’t actually perfectly black, but have random noise, and the background color isn’t perfectly uniform, but has random noise.

This is not visible to the human eye but it can be detected with tools, and it’s an artifact caused by how (I believe diffusion) models work
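For anyone who wants to check this themselves, here is a minimal sketch of the kind of test I mean. It assumes you have already pulled the channel values of a "flat-looking" region out of the image with whatever tool you like. The function name and the sample values are made up for illustration, not taken from the actual comic.

```python
from collections import Counter

def flat_region_stats(pixels):
    """Return (number of distinct values, largest deviation from the most common value)
    for a list of 0-255 channel values sampled from one region."""
    counts = Counter(pixels)
    mode, _ = counts.most_common(1)[0]
    max_dev = max(abs(p - mode) for p in pixels)
    return len(counts), max_dev

# Hypothetical samples from an area that looks like solid black to the eye.
human_black = [0] * 16
noisy_black = [0, 2, 0, 0, 3, 1, 0, 2, 0, 1, 0, 3, 2, 0, 1, 0]

print(flat_region_stats(human_black))  # (1, 0): one value, no deviation
print(flat_region_stats(noisy_black))  # (4, 3): several values within a few steps of black
```

A truly flat fill gives one distinct value. A region with several distinct values that all sit within a few steps of each other is the "subtle texture" described above.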

Not using plain RGB black and white isn’t a new thing, neither is randomising. Digital artists might rather go with a uniform watercolour-like background to generate some framing instead of an actual full background but, meh. It’s not a smoking gun by far.

The one argument that does make me think this is AI was someone saying “Yeah the new ChatGPT tends to use that exact colour combination and font”. Could still be a human artist imitating ChatGPT but preponderance of evidence.

I can generally spot SD and SDXL generations but on the flipside I know what I’d need to do to obscure the fact that they were used. The main issue with the bulk AI generations I see floating around isn’t that they’re AI generated, it’s that they were generated by people with not even a hint of an artistic eye. Or vision.

But that doesn’t really matter in this case as this work isn’t about lines on screen, those are just a mechanism to convey a joke about Excel. Could have worked in textual format, the artistry lies with the idea, not in the drawing, or imitation thereof.

The thing missing here is that usually when you do texture, you want to make it visible. The AI ‘watercolor’ is usually extremely subtle, only affecting the 1-2 least significant bits of the color, to the point even with a massive contrast increase it’s hard to notice, and usually it varies pixel by pixel like I guess “white noise” instead of on a larger scale like you’d expect from watercolor
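A rough sketch of how to make that low-bit variation visible: throw away everything except the lowest one or two bits and stretch what is left to the full range. The function name and the sample rows are hypothetical. The point is only that a flat fill stays uniform after this, while pixel-by-pixel noise does not.

```python
def amplify_low_bits(values, bits=2):
    """Keep only the lowest `bits` bits of each 0-255 value and stretch them to 0-255."""
    mask = (1 << bits) - 1
    scale = 255 // mask
    return [(v & mask) * scale for v in values]

# Hypothetical row from a background that looks like a uniform light grey.
flat_row = [240] * 8
noisy_row = [240, 241, 239, 240, 242, 240, 241, 239]

print(amplify_low_bits(flat_row))   # [0, 0, 0, 0, 0, 0, 0, 0]
print(amplify_low_bits(noisy_row))  # [0, 85, 255, 0, 170, 0, 85, 255]
```

The amplified value is not the size of the deviation (239 is only one step below 240 but maps to 255). What matters is whether the result is uniform or jumps around from pixel to pixel.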

(it also affects the black lines, which starts being really odd)

I guess it isn’t really a 100% proof, but it’s at least 99% as I can’t find a presumed-human made comic that has it, yet every single “looks like AI” comic seems to have it

Could potentially be a compression artefact but I freely admit I’m playing devil’s advocate right now. Do not go down that route we’d end up at function approximation with randomised methods and “well intelligence actually is just compression”.

I actually kind of looked at (jpeg) compression artifacts, and it’s indeed true to the extent that if you compress the image badly enough, it eventually makes it impossible to determine if the color was originally flat or not.

(eg. gif and dithering is a different matter, but it’s very rare these days and you can distinguish it from the “AI noise” by noticing that dithering forms “regular” patterns while “AI noise” is random)

Though from a few tests I did, compression only adds noise to comic style images near “complex geometry”, while removing noise in flat areas. This tracks with my rudimentary understanding of the discrete cosine transform jpeg uses*, so any comic with a significantly large flat area is detectable as AI based on this method, assuming the compression quality setting is not unreasonably low.

*(which should basically be a variant of the fourier transform)
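To illustrate why flat areas behave so nicely under this kind of transform, here is a toy sketch, not real JPEG. It runs a 1-D, unnormalised DCT-II on 8 samples and rounds every coefficient to a single step size. As far as I understand it, actual JPEG works on 8x8 blocks in 2-D and uses a per-frequency quantisation table, so treat the helper names and the step values as assumptions made purely for illustration.

```python
import math

def dct_1d(block):
    """Unnormalised DCT-II of a sequence of samples."""
    n = len(block)
    return [
        sum(x * math.cos(math.pi / n * (i + 0.5) * k) for i, x in enumerate(block))
        for k in range(n)
    ]

def quantise(coeffs, step):
    """Round each coefficient to the nearest multiple of `step`
    (a crude stand-in for JPEG's quantisation tables)."""
    return [round(c / step) * step for c in coeffs]

flat = [200] * 8
noisy = [200, 201, 199, 200, 202, 200, 201, 199]

# Fine quantisation: the flat block is a single DC term, the noisy block keeps some AC terms.
print(quantise(dct_1d(flat), step=1))
print(quantise(dct_1d(noisy), step=1))

# Coarse quantisation: the small AC terms caused by the noise are rounded away.
print(quantise(dct_1d(flat), step=16))
print(quantise(dct_1d(noisy), step=16))
```

A flat block only ever has the first (DC) coefficient, so compression has nothing to add there. Low-amplitude noise shows up as small higher-frequency coefficients, which survive a fine step and disappear under a coarse one.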

I recreated most of the comic image by hand (using basic line and circle drawing tools, ha) and applied heavy compression. The flat areas remain perfectly flat (as you’d expect as a flat color is easier to compress)

But the AI image reveals a gradient that is invisible to the human eye (incidentally, the original comic does appear heavily jpeg’d, to the point I suspect it could actually be chatgpt adding artificial “fake compression artifacts” by mistake)

there’s also weird “painting” behind the texts which serves no purpose (and why would a human paint almost indistinguishable white on white for no reason?)

the new ai generated comic has less compression, so the noise is much more obvious. There’s still a lot of compression artifacts, but I think those artifacts are there because of the noise, as noise is almost by definition impossible to compress
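A quick way to see the "noise doesn't compress" part, as a sketch only: it uses `zlib` (lossless DEFLATE) rather than JPEG, and the pixel values are synthetic, so it only shows that noisy data costs more bytes than flat data and says nothing about the comic itself.

```python
import random
import zlib

random.seed(0)  # fixed seed so the run is repeatable

size = 64 * 64  # one byte per pixel, single channel
flat = bytes([240] * size)
# Same base colour, with each pixel nudged by -1, 0 or +1 at random.
noisy = bytes(240 + random.randint(-1, 1) for _ in range(size))

flat_size = len(zlib.compress(flat, 9))
noisy_size = len(zlib.compress(noisy, 9))
print(flat_size, noisy_size)  # the noisy buffer needs far more bytes
```

The two buffers would look identical on screen, but the compressor has to spend bytes on every random nudge.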

Same here. I did not think twice about this picture or a few other posts in the past and yet there are pitchforks in the comments and I think to myself “What are you all on about?”. I rue how unrecognizable AI “slop” has gotten.

The reason this one is blatant AI is that the imagery doesn’t make any sense. Why is the glass of water itself the optimist?

I fed it into ChatGPT, highlighted the errors, and told it what I wanted to be different.

Still looks kinda soulless.

Excuse me. Who are you to talk down to a glass of water like that. Can’t you just mind your own business and let the glass be optimistic.

Most of it for me is the font. It seems like chatgpt likes to use the same font for everything

It also kind of feels off somehow. I can’t explain it, but there’s just something wrong with this image.

I mean… for me it’s the “why the fuck is the glass talking?”

Left guy has 1 arm. Both guys have an arm that melds into the surface that the glass is sitting on. The “optimist” is the glass.

Perspective.

A year ago ai couldn’t even make any sort of recognisable text, now it can do it flawlessly